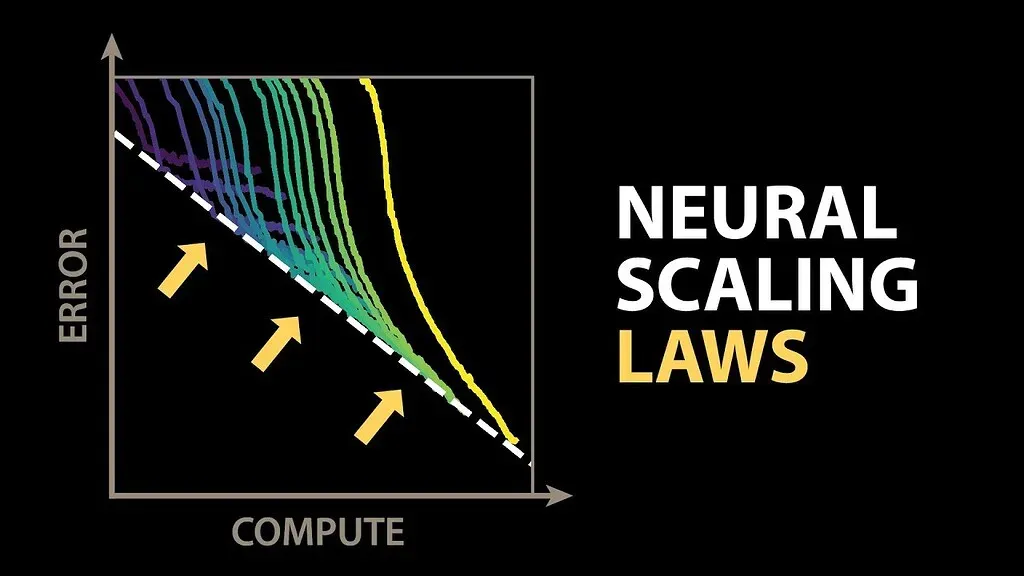

AI progress and scaling laws have become a central frame for understanding how machines learn, how they grow more capable, and what breakthroughs we should expect next. In an Open Questions essay, Cal Newport revisits the 2020 OpenAI scaling laws report, led by Jared Kaplan with Dario Amodei among its coauthors, which traced how increasing model size and training data could yield substantial gains. The essay recounts milestones such as GPT-3 and GPT-4 and notes that broad, rapid progress has slowed since then, even as GPT-4's capabilities continue to be refined. It highlights a shift toward strategies that squeeze more performance from existing architectures. As debates about AI ethics and regulation intensify, Newport argues for humility about sweeping promises while acknowledging that thoughtful policy work will be essential.

Viewed from another angle, the journey of machine intelligence appears as a timeline of capability gains, scaling effects, and ongoing refinements. This broader view shows how data availability, compute choices, and iterative tuning shape outcomes beyond headline milestones. Rather than pursuing ever-bigger models alone, teams invest in smarter training approaches and more efficient architectures to extract more value from existing systems. Alongside these technical shifts, conversations about responsibility, governance, and policy serve as essential guardrails for aligned, ethical deployment.

AI progress and scaling laws: tracing a contested trajectory from 2020 to today

Cal Newport’s Open Questions essay revisits the arc of AI progress, beginning with the 2020 OpenAI scaling laws report led by Jared Kaplan, with Dario Amodei among its coauthors. The piece explains how an early belief held that simply making models bigger and feeding them more data would yield inexhaustible improvements, a mindset that helped fuel expectations for broad, transformative capabilities.

This framing fed a narrative of rapid, almost exponential gains and contributed to optimism about artificial general intelligence. The essay then traces how those hopes evolved as the field ran into real-world limits, prompting a turn toward post-training refinements and a more nuanced view of what scale alone can and cannot deliver. Newport emphasizes humility in forecasting while recognizing that significant changes can still occur and that policy and ethics must keep pace with them.

The scale-first era: how bigger models and data shaped early expectations

During the scale-first era, researchers prioritized increasing model size, accumulating vast datasets, and expanding computational budgets as the primary engines of progress. This approach marked a clear phase in the AI progress timeline, where larger models often yielded impressive benchmarks and new capabilities that captured public imagination.

Yet as the field matured, the limits of scaling became more visible. While bigger systems delivered improvements in certain domains, they did not universally translate into effortless, sweeping breakthroughs. The narrative began to acknowledge that progress might require smarter methods alongside bigger models, tempering earlier hype with a recognition of diminishing returns.
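To make "diminishing returns" concrete, here is a minimal Python sketch under one stated assumption: test loss follows a pure power law in model size, L(N) = (N_c / N)^α, with α ≈ 0.076 and N_c ≈ 8.8 × 10^13 non-embedding parameters, roughly the fit reported in the 2020 Kaplan et al. paper. The constants and the helper names (`power_law_loss`, `gains_per_tenfold`) are illustrative assumptions, not taken from Newport's essay.

```python
# Minimal sketch: diminishing absolute returns under a power-law loss curve.
# Assumption: L(N) = (N_c / N) ** alpha, with alpha ~ 0.076 and N_c ~ 8.8e13
# non-embedding parameters (roughly the Kaplan et al., 2020 fit). Illustrative only.

ALPHA_N = 0.076
N_C = 8.8e13


def power_law_loss(n_params: float, alpha: float = ALPHA_N, n_c: float = N_C) -> float:
    """Predicted test loss for a model with n_params non-embedding parameters."""
    return (n_c / n_params) ** alpha


def gains_per_tenfold(start: float, steps: int) -> list[tuple[float, float, float]]:
    """For each 10x size increase, return (size, loss, absolute drop from the previous size)."""
    rows = []
    size = start
    prev = power_law_loss(size)
    for _ in range(steps):
        size *= 10
        loss = power_law_loss(size)
        rows.append((size, loss, prev - loss))
        prev = loss
    return rows


if __name__ == "__main__":
    for size, loss, drop in gains_per_tenfold(1e8, 4):
        print(f"{size:.0e} params: loss {loss:.3f}, improvement {drop:.3f}")
```

Under this assumption every tenfold increase multiplies loss by the same factor (10^-0.076, about 0.84), so each additional order of magnitude buys a smaller absolute improvement than the one before.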

GPT-3 to GPT-4: milestones that reframed capabilities and hype

The leap from GPT-3 to GPT-4 was a milestone that showcased stronger capabilities, including better reasoning, instruction following, and broader applicability across tasks. These developments reinforced the idea that AI progress could be dramatic, but they also highlighted the limits of what current architectures can achieve without new approaches.

Even with GPT-4, the open questions around scaling laws remained: improvements plateaued in some areas, and the hype around universal, rapid gains cooled. Newport’s narrative suggests reading these milestones as important markers rather than proof that general intelligence is imminent, which invites ongoing scrutiny of how we measure progress and how we weigh practical gains.

Slower progress and new questions: reassessing the limits of scale

Following the GPT-4 era, many observers noted a slowdown in broad, rapid progress. This pause prompted critical questions about whether simply increasing model size could continue delivering commensurate performance, or if new bottlenecks were emerging that required alternative strategies.
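One way to see why commensurate gains became harder to buy: if loss falls as a power law in model size, L ∝ N^(−α), then lowering loss by a fixed fraction requires a multiplicative, not additive, increase in size. The sketch below assumes α ≈ 0.076 (roughly the model-size exponent reported by Kaplan et al., 2020) and ignores data and compute limits; the function name is hypothetical.

```python
# Assumption: loss follows a pure power law in model size, L(N) ∝ N ** (-alpha),
# with alpha ≈ 0.076 (roughly the model-size exponent reported by Kaplan et al., 2020).
# Data and compute limits are ignored.
ALPHA_N = 0.076


def size_multiplier_for_loss_ratio(target_ratio: float, alpha: float = ALPHA_N) -> float:
    """How many times larger a model must be so that new_loss / old_loss == target_ratio.

    From L2 / L1 = (N1 / N2) ** alpha, solving for N2 / N1 gives target_ratio ** (-1 / alpha).
    """
    if not 0.0 < target_ratio < 1.0:
        raise ValueError("target_ratio must be in (0, 1) to describe an improvement")
    return target_ratio ** (-1.0 / alpha)


if __name__ == "__main__":
    for ratio in (0.95, 0.9, 0.5):
        factor = size_multiplier_for_loss_ratio(ratio)
        print(f"loss to {ratio:.0%} of current: ~{factor:,.0f}x more parameters")
```

With that exponent, cutting loss by 10% calls for roughly a 4x larger model, and halving it calls for one on the order of 9,000 times larger.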

The slowdown sharpened debates about the universality of scaling laws. Skeptics argued that scaling alone cannot guarantee breakthroughs across diverse tasks, and that overreliance on bigger models risks overpromising what AI can actually deliver. The conversation shifted toward a more balanced appraisal of capabilities and limits.

Post-training refinements: squeezing more from existing architectures

As the returns from scaling up pretraining slowed, attention turned to post-training refinements designed to extract additional performance from current architectures. Newport highlights techniques and strategies aimed at improving efficiency, alignment, and task-specific capabilities without abandoning established models.

This pivot reflects a broader belief that incremental gains can come from smarter training regimens, data curation, and optimization methods. By focusing on how models are fine-tuned, aligned, and deployed, the field seeks to extend usefulness and safety without relying solely on ever-larger systems.

Skeptics and cautions: scaling laws are not universal

Among the voices challenging the hype were thinkers like Gary Marcus and Emily Bender, who warned that scaling laws are not universal and that progress can stall or mislead if expectations aren’t grounded in empirical limits. Their cautions helped frame Newport’s critical examination of what advances can realistically achieve.

These skeptical perspectives emphasize the need for rigorous evaluation, diverse benchmarks, and attention to non-technical factors such as data quality, alignment, and governance. They remind readers that hype can outpace real engineering gains, underscoring the importance of tempered optimism.

Beyond scale: new model families and architectural shifts

The narrative moves beyond a single focus on scale to explore the emergence of new model families and architectures. These shifts suggest that breakthroughs may come from structural innovations alongside scale, including different training objectives, data ecosystems, and retrieval-augmented capabilities.
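Retrieval augmentation, one of the structural changes named in the paragraph above, adds a step in which relevant documents are fetched and placed in the model's prompt. The Python sketch below shows only that retrieval step, under simplifying assumptions: bag-of-words cosine similarity stands in for the learned embeddings and vector indexes real systems use, the model call itself is not shown, and the corpus, function names, and prompt template are all hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend retrieved passages to the question before it would be sent to a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    corpus = [  # hypothetical documents
        "Scaling laws relate model size and data to test loss.",
        "Reinforcement learning from human feedback tunes model behavior.",
        "Regulation and ethics guide responsible deployment.",
    ]
    print(build_prompt("How does model size affect loss?", corpus, k=1))
```

The design point is that the model's weights stay fixed; capability on a given question improves because better context is supplied at inference time.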

New approaches aim to diversify the AI toolkit, potentially enabling more robust performance across tasks with different data profiles and application contexts. Newport’s survey implies that the path to future gains is likely a mosaic of scale, training discipline, and architectural experimentation.

Bigger systems vs smarter training: debates about future gains

A central debate concerns whether future improvements will come predominantly from bigger systems or from smarter ways to train and tune them. The piece notes industry discussions about allocating resources—whether to scale up hardware and datasets or to invest in refined training methods and alignment techniques.
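The resource question can be framed with a widely used rule of thumb: training a dense transformer costs roughly C ≈ 6·N·D floating-point operations, where N is the parameter count and D the number of training tokens (about 2 FLOPs per parameter per token for the forward pass and 4 for the backward pass). The sketch below is an approximation that ignores attention overhead and hardware efficiency; the function names are hypothetical.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute for a dense transformer: C ≈ 6 * N * D FLOPs.

    Rule of thumb: ~2 FLOPs per parameter per token forward, ~4 backward.
    Ignores attention overhead and hardware utilization.
    """
    return 6.0 * n_params * n_tokens


def tokens_for_budget(flop_budget: float, n_params: float) -> float:
    """Number of training tokens a fixed FLOP budget covers for a model of n_params parameters."""
    return flop_budget / (6.0 * n_params)


if __name__ == "__main__":
    budget = training_flops(175e9, 300e9)  # GPT-3-scale parameter and token counts
    print(f"GPT-3-scale training compute: {budget:.2e} FLOPs")
    # Same budget, model twice as large: the budget now covers half as many tokens.
    print(f"tokens at 2x parameters: {tokens_for_budget(budget, 350e9):.2e}")
```

For GPT-3's 175 billion parameters and roughly 300 billion training tokens, this gives about 3.15 × 10^23 FLOPs, close to the figure reported for that model. At a fixed budget, doubling N halves the tokens the budget can cover, which is one reason the choice between bigger models and other uses of compute is a genuine trade-off.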

This tension mirrors the broader question of how to optimize AI progress responsibly. The answer may lie in a hybrid strategy that balances selective scaling with targeted refinements, enabling sustainable advances without succumbing to escalation-driven hype.

AI ethics and regulation: guiding responsible progress

As the field evolves, Newport underscores the necessity of thoughtful regulation and ethical considerations. The trajectory of AI progress cannot be decoupled from governance, safety, and societal impact, especially given the potential for disruptive changes.

Discussions of AI ethics and regulation focus on building safeguards, transparency, and accountability into development and deployment. The essay argues that policy work must keep pace with technical advances to harness benefits while mitigating risks.

AI progress timeline: mapping milestones and turning points

Charting the AI progress timeline involves identifying key milestones such as the OpenAI scaling laws report, GPT-3, and GPT-4, while also noting periods of slower growth and renewed focus on refinement techniques. This timeline helps frame how expectations have shifted over the past few years.

Understanding the timeline also highlights turning points where the emphasis moved from scale alone to post-training refinements, alignment concerns, and regulatory considerations. It provides a structured view of how ideas about AI progress have evolved and where continued attention is needed.

Industry debates and policy alignment: steering growth with responsibility

Within industry circles, debates continue about the best path forward for responsible innovation. Organizations wrestle with questions about deployment, risk management, and the ethical implications of increasingly capable systems.

Aligning policy and industry incentives through thoughtful governance helps ensure that advances in AI deliver benefits while reducing potential harms. Newport’s discussion implies that ongoing collaboration between technologists and regulators is essential for sustainable growth.

Humility and disruption: Newport’s closing perspective on the path ahead

In closing, Newport advocates humility about promises of rapid breakthroughs, acknowledging that disruptive changes may still occur while urging careful policy and ethics work. This stance serves as a counterweight to sensational forecasts and keeps attention on responsible development.

The closing message emphasizes that progress will likely be uneven and contingent on social, political, and regulatory contexts. By maintaining humility and prioritizing thoughtful governance, the field can navigate potential disruptions while pursuing meaningful, beneficial advances.

Frequently Asked Questions

What are AI scaling laws and how did they shape early AI progress expectations?

AI scaling laws are empirical relationships showing that a language model's test loss falls smoothly, roughly as a power law, as model size, training data, and compute increase, which suggests predictable gains from scale. The 2020 OpenAI scaling laws report, led by Jared Kaplan with Dario Amodei among its coauthors, helped fuel expectations of inexhaustible improvements and even progress toward AGI. However, scholars cautioned that scaling laws are not universal and that hype can outpace practical results.
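For reference, the 2020 paper summarizes its findings as power laws, each holding when the other two factors are not the bottleneck. Here L is test loss, N is the non-embedding parameter count, D is dataset size in tokens, and C_min is compute used efficiently; the constants N_c, D_c, C_c^min and the exponents are fitted empirically, and the exponent values below are the approximate ones reported there.

```latex
L(N) = \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad \alpha_N \approx 0.076
L(D) = \left(\frac{D_c}{D}\right)^{\alpha_D}, \qquad \alpha_D \approx 0.095
L(C_{\min}) = \left(\frac{C_c^{\min}}{C_{\min}}\right)^{\alpha_C^{\min}}, \qquad \alpha_C^{\min} \approx 0.050
\log L(N) = \alpha_N \log N_c - \alpha_N \log N
```

The last line shows why the gains looked predictable: on log-log axes each law is a straight line, so extrapolating to larger N, D, or C is a matter of extending that line, an extrapolation that holds only as long as the fitted trend does.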

How did GPT-4 capabilities shape the AI progress timeline and scaling expectations?

GPT-4's capabilities, building on the GPT-3 milestone, marked concrete steps on the AI progress timeline and reinforced the view that scaling up could yield large leaps. After these milestones, the pace of improvement slowed, prompting reflection on the limits of scaling and the value of alternative methods.

Why did AI progress slow after the early scaling optimism reflected in the AI progress timeline?

Progress slowed due to diminishing returns from size alone and the realization that scaling laws are not universal. This slowdown helped trigger a shift toward post-training refinements to extract more performance from existing architectures and spurred exploration of new model families.

What are post-training refinements and how do they differ from scaling up models?

Post-training refinements include techniques like fine-tuning, instruction tuning, and reinforcement learning from human feedback (RLHF) to improve capabilities without increasing model size. These methods provide meaningful gains by optimizing how models are trained and used, complementing scaling efforts rather than replacing them.
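RLHF is a multi-stage process, and the sketch below covers only one stage. A reward model is trained on comparisons in which a human preferred one response over another, commonly with a Bradley-Terry style pairwise loss, −log σ(r_chosen − r_rejected); the policy model is later optimized against that reward model (for example with PPO), which is not shown. The reward scores here are plain numbers standing in for a neural network's outputs, and the function names are hypothetical.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)) for one human comparison.

    Small when the reward model scores the human-preferred response well above
    the rejected one; equals log(2) when it cannot tell them apart.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(x)) == softplus(-x); branch on the sign to avoid overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen_reward, rejected_reward) pairs, as in a training batch."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    batch = [(2.0, 0.5), (0.1, 0.3), (1.0, 1.0)]  # hypothetical reward-model scores
    print(f"mean loss: {mean_preference_loss(batch):.4f}")
```

Minimizing this loss pushes the reward model to rank responses the way human labelers did, without changing the size of the underlying language model.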

Are AI scaling laws universal, or do skeptics warn against overestimating progress?

While AI scaling laws describe general trends, skeptics such as Gary Marcus and Emily Bender warn they are not universal and that hype can overstate what scaling alone delivers. The debate underscores the need for careful evaluation and the value of multiple approaches beyond bigger models.

What do AI scaling laws and the debate between bigger systems versus smarter training imply for future gains?

The scaling laws and the debate over bigger systems versus smarter training suggest that future gains will come from a mix of approaches. Some advances may still come from larger systems, but smarter training, data curation, and tuning are likely to play a crucial role; how that balance will fall remains one of the open questions about scaling versus refinement.

How do AI ethics and regulation intersect with rapid AI progress and scaling?

AI ethics and regulation are essential as progress accelerates, guiding risk management, transparency, fairness, and accountability. Thoughtful policy helps ensure responsible deployment while balancing innovation with societal safeguards.

What do GPT-4 capabilities imply for the future of AI progress and what milestones might come next?

GPT-4's capabilities suggest that the next milestones on the AI progress timeline may hinge on improved alignment, efficiency, and specialized capabilities. The path likely combines new model families with refined training techniques, tempered by humility about rapid breakthroughs.

How should policy makers address AI ethics and regulation amid accelerating progress?

Policy makers should focus on safety standards, governance, and stakeholder engagement, ensuring that ethics and regulation evolve alongside capabilities. Proactive governance supports responsible innovation and helps manage risks as the field advances.

What role do new model families play in the AI progress timeline and the evolution of scaling laws?

New model families can reshape the AI progress timeline by offering alternative architectures and training regimes, altering how gains accrue and how scaling laws apply in practice. This diversification complements scaling efforts and expands the set of viable paths to improved capabilities.

Is transformative AI possible without exponential scaling, or will AI scaling laws still drive breakthroughs?

Transformative AI could emerge without exponential scaling, as progress also depends on smarter training, post-training refinements, and robust evaluation. AI scaling laws may still guide where gains come from, but the path to breakthroughs is likely multifaceted.

What lessons from Cal Newport’s Open Questions essay about AI progress and regulation can inform policymakers and researchers?

Cal Newport’s essay emphasizes humility about rapid AI breakthroughs and the importance of thoughtful policy and ethics as the field evolves. It highlights the need for careful regulation, clear governance, and balanced optimism to manage disruptive changes responsibly.

| Key Point | Details |
|---|---|
| Origins and framing | Newport’s Open Questions essay traces AI progress and scaling discussions, referencing the 2020 OpenAI scaling laws report led by Jared Kaplan with Dario Amodei. |
| Early scaling belief | The belief that larger models and more data would yield inexhaustible improvements, fueling expectations of AGI. |
| Milestones | Milestones like GPT-3 and GPT-4 are noted as markers of progress. |
| Slowdown and shift | After initial rapid progress, there was a slowdown, with a pivot toward post-training techniques to extract more from existing architectures. |
| Skeptical voices | Skeptics such as Gary Marcus and Emily Bender argued scaling laws are not universal and hype can overstate progress. |
| Debates about future gains | Debates about whether future gains will come from bigger systems or smarter training/tuning; emergence of new model families. |
| Risks, ethics, and regulation | Rhetoric about transformative potential is balanced by concerns about hype, regulation, and ethical considerations as tech evolves. |
| Takeaway | Newport emphasizes humility about rapid breakthroughs while acknowledging disruptive changes may occur and policy/ethics work remains essential. |

Summary

Scaling laws shape how researchers view the pace of AI progress, and Newport’s Open Questions piece traces the shift from scale-driven promises to post-training refinements that squeeze more performance from existing architectures. The discussion surveys optimistic and skeptical perspectives on whether future gains will come from bigger systems, smarter training, or new model families, and highlights the ongoing need for thoughtful regulation and ethical considerations as the field evolves. A measured approach, one that recognizes potential disruption while prioritizing policy and ethics, remains essential for navigating AI progress and scaling laws.